128 bits of salvation

During 1998 the graphics industry was adopting the 0.25 µm manufacturing process for bleeding-edge chips, and many gave up after the first attempts because the node did not turn out to be mature enough. One company that stubbornly stuck with it was ATI, delivering a completely new architecture named Rage 128. This design no longer carries traces of the Mach64 legacy. While its code name may mark it as the fourth generation of Rage 3d chips, it is the most radical change so far. The number 128 refers to the width of both the memory interface and the engine, be it the 2d or the 3d pipeline. This doubles the performance of the previous Rage Pro. Despite the transistor count more than doubling, the Rage 128 is clocked faster than the Rage Pro. The number of caches (at least the promoted ones) was also doubled. Besides the good old texture cache, a new cache was added between the render output and video memory. ATI called it the Pixel cache, and it was one of the known sources of delays. All this memory bandwidth serves two independent pixel pipelines that can together output two textured and bilinearly filtered pixels per clock. With its massive 8 million transistors, the Rage 128 doubles the Rage Pro's performance in all the important metrics and remedies its shortfalls at once. The chip was probably the biggest graphics hardware engineering effort of 1998, but high ambitions do not always pan out.

Bad news first

The first silicon ATI provided to partners had many serious drawbacks. One may wonder what caused this embarrassing situation and its follow-ups: whether the manufacturing process was to blame, or whether validation on ATI's side wasn't up to the task. The fact is that the first chip was not even stable, and changes in the metal layer were needed. That was September 1998; had things been working as intended, Nvidia's dual-pipeline TNT could have been dethroned by the Rage 128. But fixes need time. One month later a newer revision was distributed, but still only as an engineering sample. Clocks were near the target, sometimes even without overvolting. But because of bugs with the Pixel cache a new revision was needed anyway. That is why a production revision finally appeared only in November. ATI was claiming a 100 MHz engine clock and 125 MHz for memory, or even 143 MHz should only a 64-bit connection be used. Those are respectable numbers; keep in mind the main competitors were clocking at around 90 MHz. The boards sent to reviewers ran at 103 MHz, but rarely could you find numbers close to that in retail. And it wasn't about ATI giving worse versions to the board partners. The chip wasn't fixed enough yet! How do you package the news of 10 % lower clocks as a Christmas present? Maybe a return to nominal voltage can be the silver lining. But are you stable yet? So in January 1999 a new revision came, and along with it ATI said sorry, we really need higher voltage to keep us stable. But the story still wasn't over, because the Pixel cache caused yield issues, so let's hope we can produce more of the next revision...

You might have noticed there is quite a story behind the Rage 128 becoming more of a 1999 product than a 1998 one. Those who want can shape it into a comedy or a tragedy. The Rage 128 kept arriving in many card variants. I will be the first to admit that mapping the 90s ATI products was never easy, and perhaps here the difficulty peaks.

Two chip variants and why VR

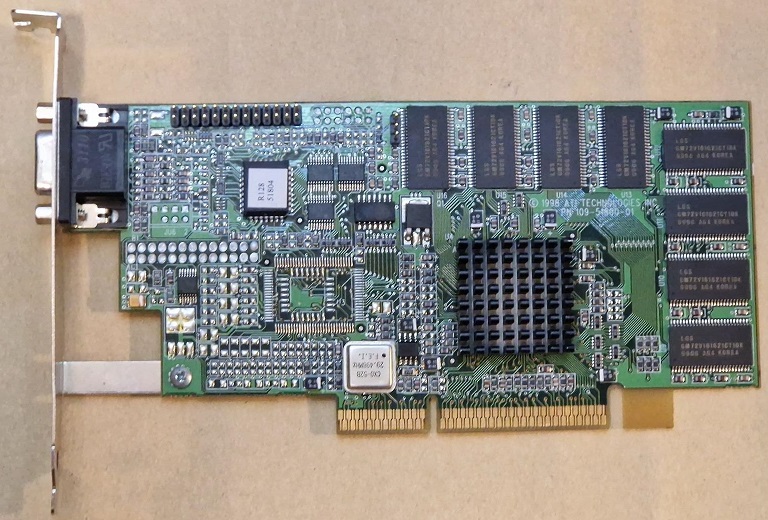

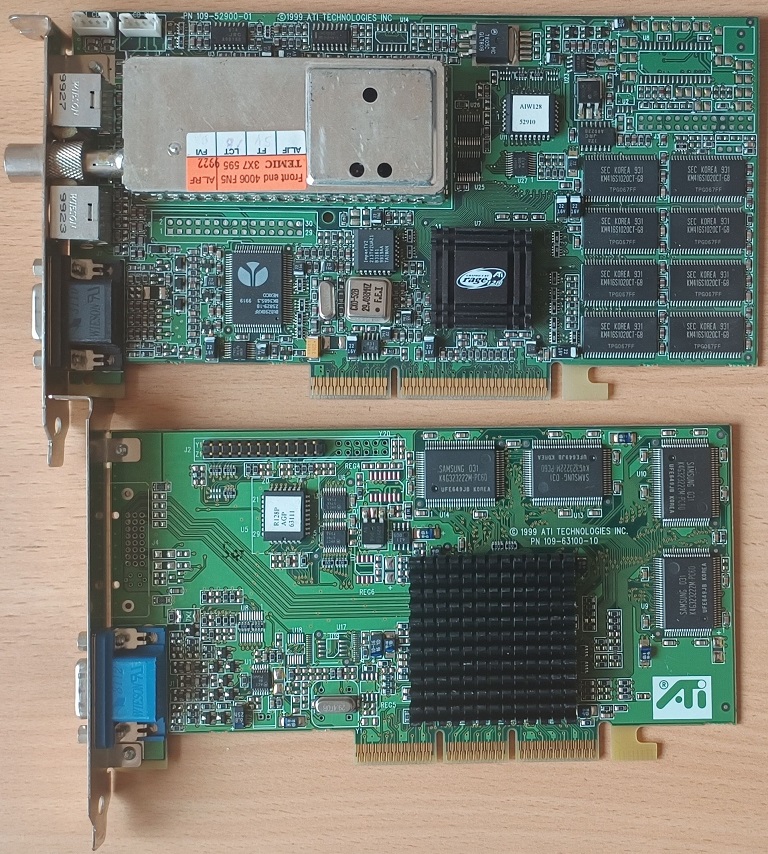

The Rage 128 was offered in two main variants: GL and VR. The GL has a full 128-bit memory bus, and the memory is clocked synchronously with the chip. The flagship card was the Rage Magnum, equipped with 16 or 32 MB of SGRAM. Then there were 128-bit cards simply called "Rage 128", with simpler SDRAM limiting their performance at high resolutions.

Your basic Rage 128 GL card running at 90 MHz.

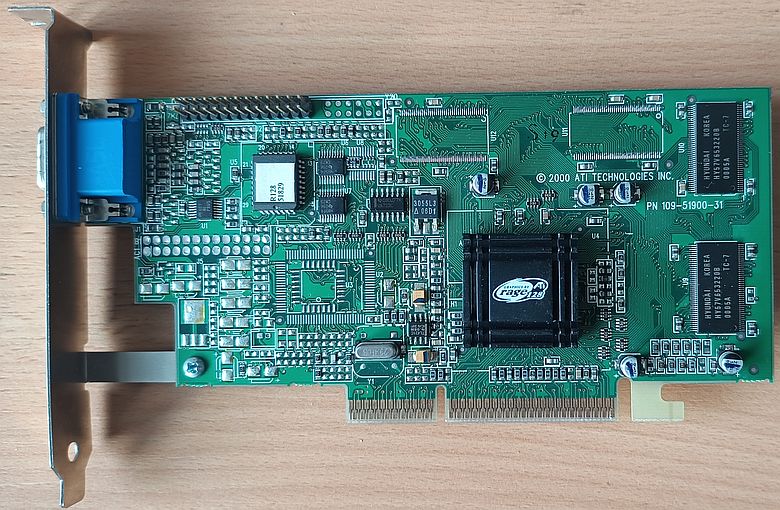

The card tested is this 16 MB variant with a 64-bit memory bus.


The cheaper VR, with its reduced pin count, has only a 64-bit bus, but some of the bandwidth loss can be compensated by memory ticking at a 3:2 ratio to the chip clock, up to 125 MHz for SGRAM or 143 MHz for SDRAM. According to the documentation the VR also supports DDR memory, which could have been an industry first, but I found no cards equipped with this memory type. Probably at the time the extra cost of DDR would have been more than what 64-bit boards would save. The official Rage 128 PC product stack seems to be this:

| Card |
| --- |
| Rage Magnum |
| Rage Fury |
| Xpert 128 |
| Xpert 2000 (64-bit) |
| Xpert 99 (64-bit) |

The clocks of these are typically 90 MHz, except for certain series of the Rage top dogs. The Xpert cards were more focused on office use. Xpert 128 was limited to 16 MB and Xpert 99 to 8 MB of memory. Just because a card is a 64-bit one does not mean it has the VR chip. If you need a simple tip for telling the Rage 128 from the later Rage 128 Pro line-up, take note of heatsink size: Rage 128 Pro cards made by ATI have heatsinks bigger than the chip.

On top of those, there were bulk cards simply called Rage 128. Those could have clocks below 90 MHz. The Magnums were reviewed a lot, but VR cards did not get much spotlight. Filling the gaps in knowledge is what I like to do the most, so here we go. The card tested has the core clocked at a feeble 80 MHz, the lowest I found. Are there other benefits to reviewing one of the slowest Rage 128 cards? It allows for a very direct comparison of improvements made between generations. The bus width is the same and the chip clock is quite close to both Rage Pro and Rage XL.
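To put the bus widths and memory clocks of the GL and VR variants into perspective, here is a minimal sketch of the peak theoretical bandwidth of the configurations mentioned above. It assumes single data rate memory with one transfer per clock and ignores refresh and page-miss overhead; the function name `peak_bandwidth_mb_s` is made up for this illustration.

```python
def peak_bandwidth_mb_s(bus_bits, memory_mhz):
    """Peak theoretical bandwidth in MB/s (10^6 bytes per second).

    Assumes one transfer per memory clock (single data rate)
    and no overhead of any kind, so real numbers are lower.
    """
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * memory_mhz


# Configurations taken from the text above.
configurations = [
    ("GL, 128-bit, memory synchronous at 90 MHz", 128, 90),
    ("VR, 64-bit, SGRAM at 125 MHz", 64, 125),
    ("VR, 64-bit, SDRAM at 143 MHz", 64, 143),
]

for label, bus_bits, memory_mhz in configurations:
    print(f"{label}: {peak_bandwidth_mb_s(bus_bits, memory_mhz):.0f} MB/s")
```

Under these assumptions even the fastest 64-bit memory clock stays below a 128-bit bus running at 90 MHz, which is why the higher memory clock compensates only part of the loss.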

Architecture

The story of ATI's 3d chips so far was promising but not exactly a success: from the first 3D Rage, which hardly accelerates any games, through the very compatible but slow Rage II family (R2 chips), to the Rage Pro family (R3) with its big performance uplift. Here comes the fourth generation with its record-breaking transistor budget to aim even higher. The doubling of pixel pipelines and memory bus width is merely the most obvious part of another big jump in 3d rendering speed.

What are all the transistors of R4 doing? We are dealing with a very advanced design ready for 32-bit depth rendering at "full speed", at least in theory. On the video front, everything for hardware DVD playback is in. RAMDAC speed is 250 MHz and the card handles 1920x1200 3d resolution just fine. At the very start sits the Concurrent Command Engine, the granddaddy of today's command processor, ready to reorder commands coming out of the queue to feed internal registers as fast as possible, enabling processing of more commands at once. Of course, the full triangle setup engine the Rage Pro pioneered is featured in the Rage 128 as well, just with performance doubled to keep the triangle-to-pixel ratio the same: the setup engine, supporting triangle strips, prepares twice as many triangles as the Rage Pro could at the same frequency. Memory bandwidth can perhaps hurt that rate a lot, since the tested card often does not seem able to produce more small triangles than R3. And being that constrained by memory bandwidth, it may not always fill very large triangles faster either. Subpixel accuracy stayed at 4 bits, but this is the only point where the Rage XL can tie. There was no problem with mip-map selection at all. The texture cache was doubled to 8 kB, a leading value in 1998. The texture units are still capable of one bilinear texel per cycle, therefore expect a performance hit from the trilinear filter, which halves the theoretical fillrate. In the real world, it shouldn't be more than 20 %. Although the bilinear filter is still not fully precise, at least it provides more gradients. The imprecision can lead to visible banding on extremely magnified textures, which is rare. The blending output has no problem filtering alpha textures. It is here, between the ROPs and the memory ports, where the pixel cache delivers its bandwidth-saving benefits. It can provide destination, source, and Z values for blending calculations and the operations themselves, saving reads from memory. It can also keep the destination value for multiple operations, saving memory writes. Z-buffer depth can be set to 16, 24, or 32 bits. The overall 3d image quality is great and has none of the 16-bitness older Rages suffered from. With one exception.
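The fillrate arithmetic behind the two pipelines and the trilinear penalty can be sketched as follows. This is only the theoretical peak, assuming each pipeline outputs one bilinear pixel per clock and trilinear filtering takes two cycles per pixel; the function name and its parameters are invented for this example, and the 80 MHz clock is that of the tested card.

```python
def theoretical_fillrate_mpix(core_mhz, pipelines=2, trilinear=False):
    """Peak fillrate in megapixels per second.

    Assumes one bilinear-filtered pixel per pipeline per clock.
    Trilinear needs two bilinear samples, so the peak is halved.
    """
    rate = core_mhz * pipelines
    return rate / 2 if trilinear else rate


core_mhz = 80  # core clock of the tested card
bilinear = theoretical_fillrate_mpix(core_mhz)
trilinear = theoretical_fillrate_mpix(core_mhz, trilinear=True)

print(f"bilinear peak:  {bilinear:.0f} Mpix/s")
print(f"trilinear peak: {trilinear:.0f} Mpix/s")
```

In practice the gap is smaller than this halving suggests, because the card is rarely limited by texture sampling alone; memory bandwidth usually sets the pace first.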

Experience

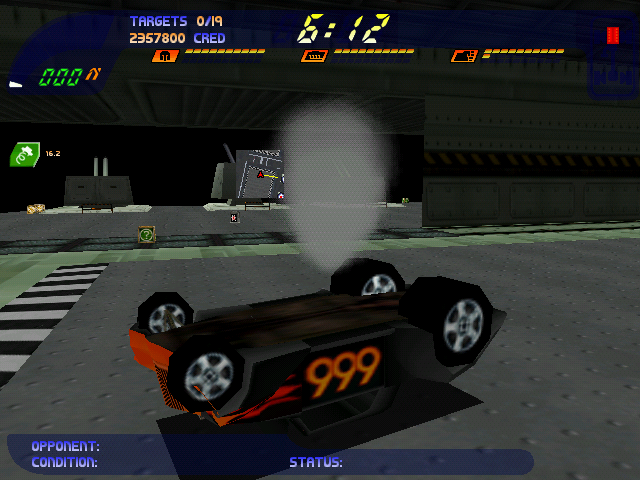

There is one alpha blending mode that produces a distracting dithering pattern. It is plainly visible in Viper Racing or Carmageddon II:

The transparent smoke ended up being rendered at anything but true color precision.


So far the card was tested with only one driver: the latest beta I could find, version 7192. I tried a few older drivers but they did not improve the pathological cases. The beta version is probably the cause of problems in high-profile games like Quake 3, which wasn't even stable at high-quality settings and produced artifacts at the bottom right of the screen. GLQuake looks way too dark, implying a wrong selection of blending mode for lightmaps. It is not all bad news for OpenGL though; it suits even obscure games like Z.A.R. just fine. Rather shocking was that no Rage 128 card was compatible with the usual test motherboard based on the Intel 815. That is why a different system was used, a VIA KT133 motherboard with a Duron 750 CPU. On the Direct3d front, it was smooth sailing until Conquest: Frontier Wars. In this title the textures were missing to such a degree that I did not dare to include the result. We are also entering the age when older features can be broken. Although the driver came with GUI switches to enable table fog and paletted textures, Shadows of the Empire and Final Fantasy 8 did not show either of those in action. Having the switches in the control panel shows confidence in those features, therefore they probably worked, just not with the beta driver used for the review. One inexcusable bug is faulty character rendering in Grim Fandango; too many parts simply go missing.

For a detailed look, head to the gallery.

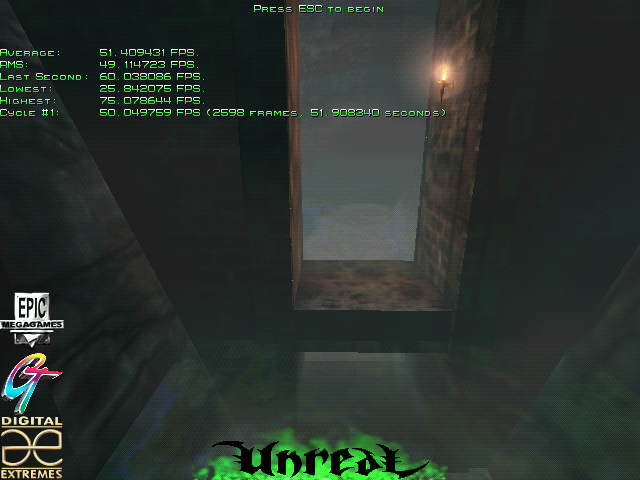

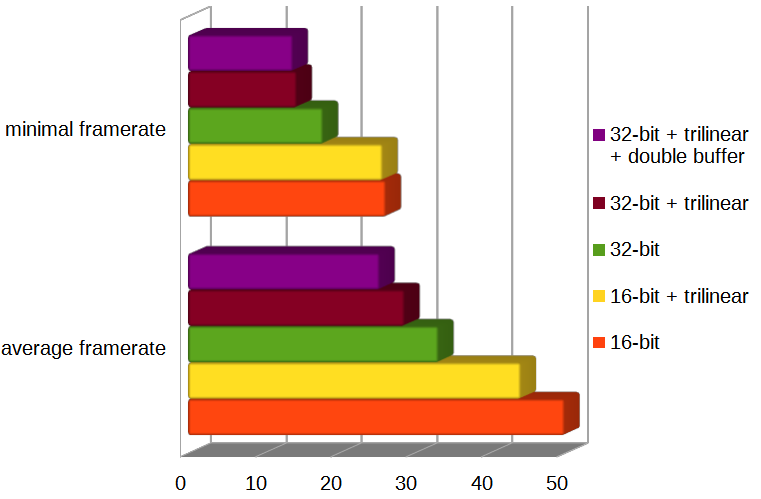

Since the Rage 128 is so well prepared for 32-bit rendering, let's examine what it brings to the table. In the following Unreal screenshot we start with 16-bit colors, where the fog triggers the ugly dithered blending; the 32-bit image comes next.

Do ignore the dotted line at the top of screenshots, it does not occur during actual gameplay.


At true colors, the dithering is gone and banding is minimized. You can notice that the few weirdly white pixels from 16-bit mode are gone. This is not an accident: a true color frame buffer also triggers higher Z-buffer precision. In Unreal, the usual 16-bit depth precision is inadequate for some fine geometry details and/or distant objects. With a switch to 32 bits, such z-fighting is eliminated as well. But what is the price of such features?

The tested card suffers a framerate drop of one-third. I wanted to soften the true color blow by reducing the Z-buffer precision, but despite limiting it via the control panel, nothing changed. Another control panel failure there; I wonder whether older drivers worked. Anyway, the trilinear filter always comes with its own cost. After enabling all of that and sinking the framerate quite low, switching from triple to double buffering on top still incurred a measurable penalty. If you want all that quality and small input lag, be ready for halved framerates. By the way, the penalty of double buffering at the speeds of default settings was much, much higher. The OpenGL control panel is functional, and thanks to that the impact of Z-buffer precision can be tested. Quake 3 at High Quality settings ran at 20.6 frames per second with a 24-bit depth. Limiting it to 16 bits improves the result by 10 %, and since no graphical degradation was found, the faster result was used. With the 64-bit Rage 128 cards the quality features are probably too costly for an average user. In this sense, ATI's GL/VR market segmentation works, unfortunately for the consumer with a 64-bit card. But I want to finish on a higher note. Overall, the 3d image quality of the Rage 128 is almost on par with the renowned G200, if you can avoid the dithered modes.
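Why a 16-bit Z-buffer struggles with distant objects in a game like Unreal can be illustrated with a small sketch. It assumes a conventional perspective Z-buffer storing integer depth, which may not match the exact format the Rage 128 uses; the near and far plane distances and the function name are hypothetical values chosen only for the example.

```python
def depth_resolution(z, near, far, bits):
    """Smallest depth difference that can be told apart at distance z.

    Assumes the standard perspective mapping
        d(z) = far / (far - near) * (1 - near / z)
    quantized into 2**bits equal steps. Inverting the slope of d(z)
    gives the world-space size of one step at distance z.
    """
    steps = 2 ** bits
    return z * z * (far - near) / (far * near * steps)


near, far = 1.0, 10000.0  # hypothetical clip planes in world units

for z in (10.0, 100.0, 1000.0):
    coarse = depth_resolution(z, near, far, 16)
    fine = depth_resolution(z, near, far, 24)
    print(f"z={z:>6}: 16-bit step {coarse:.5f}, 24-bit step {fine:.7f}")
```

The step grows with the square of the distance, so surfaces that are far away and close to each other land in the same 16-bit depth value and start to flicker, while 24 bits give 256 times finer steps at every distance.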

Even complex scenes such as these do not cause any trouble.


Performance

First let's see how the card stacks up against the best of the previous architecture, the Rage XL:

And that is the result of the geometric mean, which lowers the differences. Considering how much the old suite is hitting vsync limits, the Rage 128 is clearly much faster. Even the slowest Rage 128 card easily outdoes the older architecture in every way. That is why the XL was the new low-end and the 64-bit Rage 128 cards were midrange.
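Why the geometric mean lowers the differences can be shown with a short sketch. The per-game speedups below are made-up numbers for illustration, not results from this review.

```python
from statistics import geometric_mean, mean

# Hypothetical per-game speedups of a new card over an old one.
# One outlier (4.0x) dominates the arithmetic mean.
speedups = [1.2, 1.5, 2.0, 4.0]

arithmetic = mean(speedups)
geometric = geometric_mean(speedups)

print(f"arithmetic mean speedup: {arithmetic:.3f}x")
print(f"geometric mean speedup:  {geometric:.3f}x")
```

The geometric mean never exceeds the arithmetic mean, and it treats a doubling in one game and a halving in another as cancelling out, which makes it the more conservative summary for a suite of ratios.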

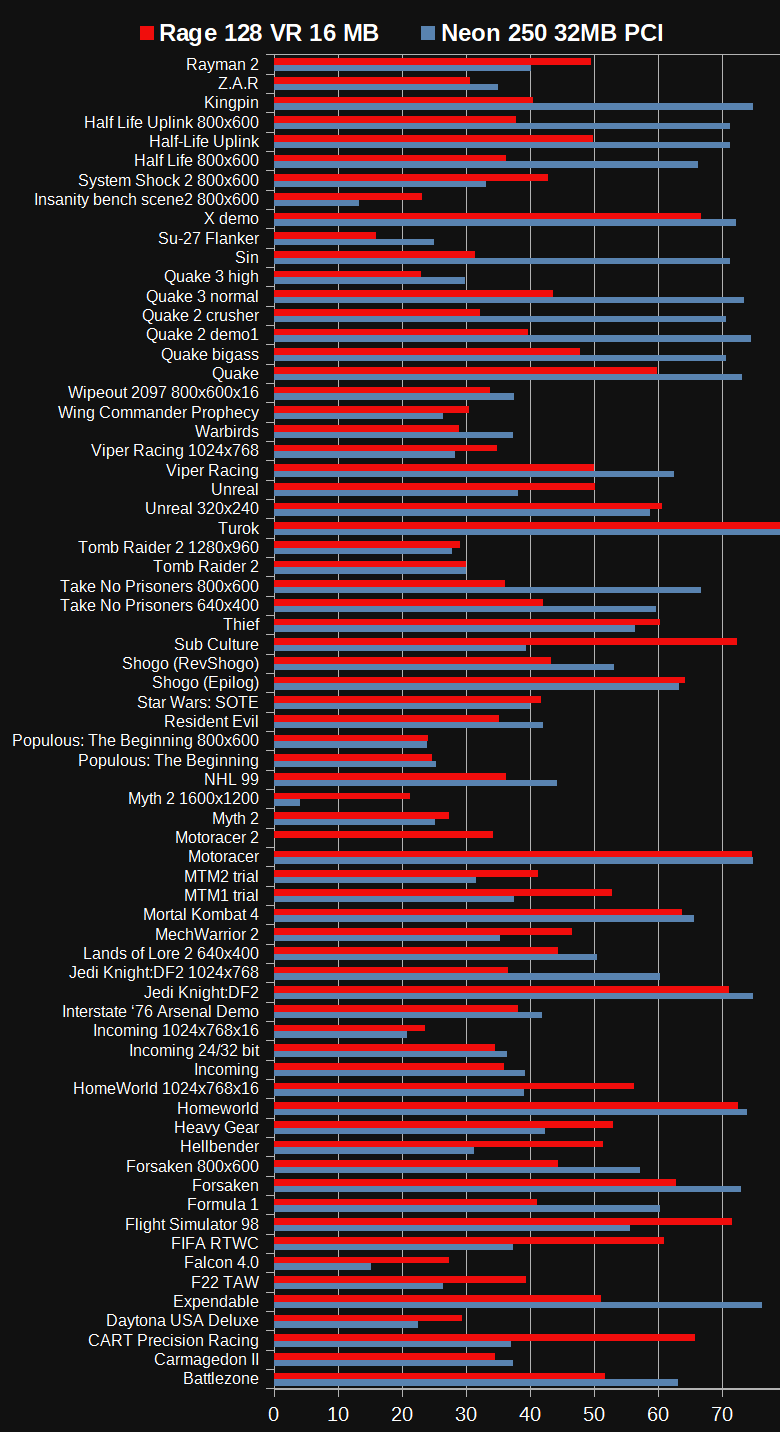

But can it hold its own against competitors? Let's try the PowerVR Neon 250:

The first thing that stands out is the massive lead of the Neon in OpenGL, but that is because of the lack of vsync. Should the tested card run without vsync, it would jump in the default Quake 2 demo from 39.6 to 64.2 frames per second. Overall, the results are very close. No matter how I try to average them, these cards perform too similarly to declare which one is faster. From this, it can already be extrapolated that the SiS 300 with its 128-bit memory bus would be beaten only by a Rage 128 with a 128-bit memory bus. The bandwidth is truly needed to make those dual pipelines fly.
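How vsync can cost so much framerate is easier to see with a simplified model. The sketch below assumes double buffering and a constant render time per frame, so every frame has to wait for the next refresh; the refresh rates are hypothetical, since the review does not state the one used, and `vsynced_fps` is a name invented for this example.

```python
import math


def vsynced_fps(uncapped_fps, refresh_hz):
    """Framerate with vsync and double buffering, constant frame time.

    A finished frame is shown at the next refresh, so each frame
    occupies a whole number of refresh intervals.
    """
    intervals = math.ceil(refresh_hz / uncapped_fps)
    return refresh_hz / intervals


uncapped = 64.2  # Quake 2 result without vsync quoted above

for refresh_hz in (60.0, 75.0, 85.0):
    capped = vsynced_fps(uncapped, refresh_hz)
    print(f"{refresh_hz:.0f} Hz refresh: {capped:.1f} fps with vsync")
```

A real benchmark average lands between such steps because individual frames take different times, but the model shows why a vsynced result says little about what the chip could do uncapped.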

Finishing words

On one hand, the Rage 128 does not have any forward-looking extras; on the other, it fixed so many issues of the Rage Pro. On one hand, it is a dual-pipeline design with the proper performance leap; on the other (spoiler alert), it does not beat similar competitors. The Rage 128 gave ATI a solid path forward, but it wasn't the king it was supposed to be.

The Rage XL also couldn't render full-resolution textures, but Rage 128 outright skips many of them.


After all the VR card showed, my conclusion is that even the lowest variants of the Rage 128 are viable, largely trouble-free products. This is the threshold at which ATI's architecture finally matured. The complicated birth is a testament to how difficult these leaps are. Only in the spring of 1999 did the Rage Fury show up. The promised gaming king variant of the Rage 128 was clocked a tiny bit above 100 MHz but had regular SDRAM memory. Therefore, whether a Magnum or a Fury is the fastest depends on the resolution used. Of course, there were still a few bugs and shortfalls to remedy. The bilinear filter, the blending mode using dithering to reduce colors, and the clocks were all fixed with the Rage 128 Pro. Despite the transistor count booming again, the new chip with a few more 3d features like DX texture compression was merely a solid refresh. Arriving in the middle of 1999, it did not turn many heads. It was again too little, too late.

ATI managed to pack the Rage 128 with 128-bit bus even onto their All-In-Wonder card. Frequency is 90 MHz. At the bottom is your standard Rage 128 Pro.


ATI still wasn't getting under the skin of gamers. To win in retail, earlier availability and good initial drivers were needed. The former being easier to fix, Radeon entered the market in the middle of 2000 as the first direct competition to Nvidia's TnL chips. ATI showed cleverly balanced chips and, in a rapidly consolidating market, suddenly became the only "real" threat to what was shaping into Nvidia's complete domination. At the end of 2001 the Radeon 8500 arrived, and while in the beginning it had some performance problems, it also showed a more advanced shading architecture than Nvidia's. In 2002, finally, all the efforts delivered the big success: the Radeon 9700, a card with undisputed performance achievements and solid drivers from the start. And since Nvidia tripped over their shoelaces, the talent of ATI was no longer in doubt. What followed was a series of ATI vs Nvidia battles without a clear conclusion. These cyclical encounters continue to this day, now of course with the ATI brand hidden under AMD. The takeover of ATI had some critics, but the trend of the future seemed to be a convergence of CPU and graphics back into a single device, and in this regard AMD is doing very well. Who knows when, but the day will come when the 3d accelerator as we use it will be unknown to gamers. ATI itself had a strong record of growing through acquisitions; in this order, they absorbed Tseng Labs, Chromatic, Artist, ArtX, XGI, and BitBoys.